Using whisper to transcribe

The following is a quick manual on getting Whisper (the model that powers OpenAI's real-time chat) to work for transcription.

It uses https://github.com/ggerganov/whisper.cpp which works like a charm.

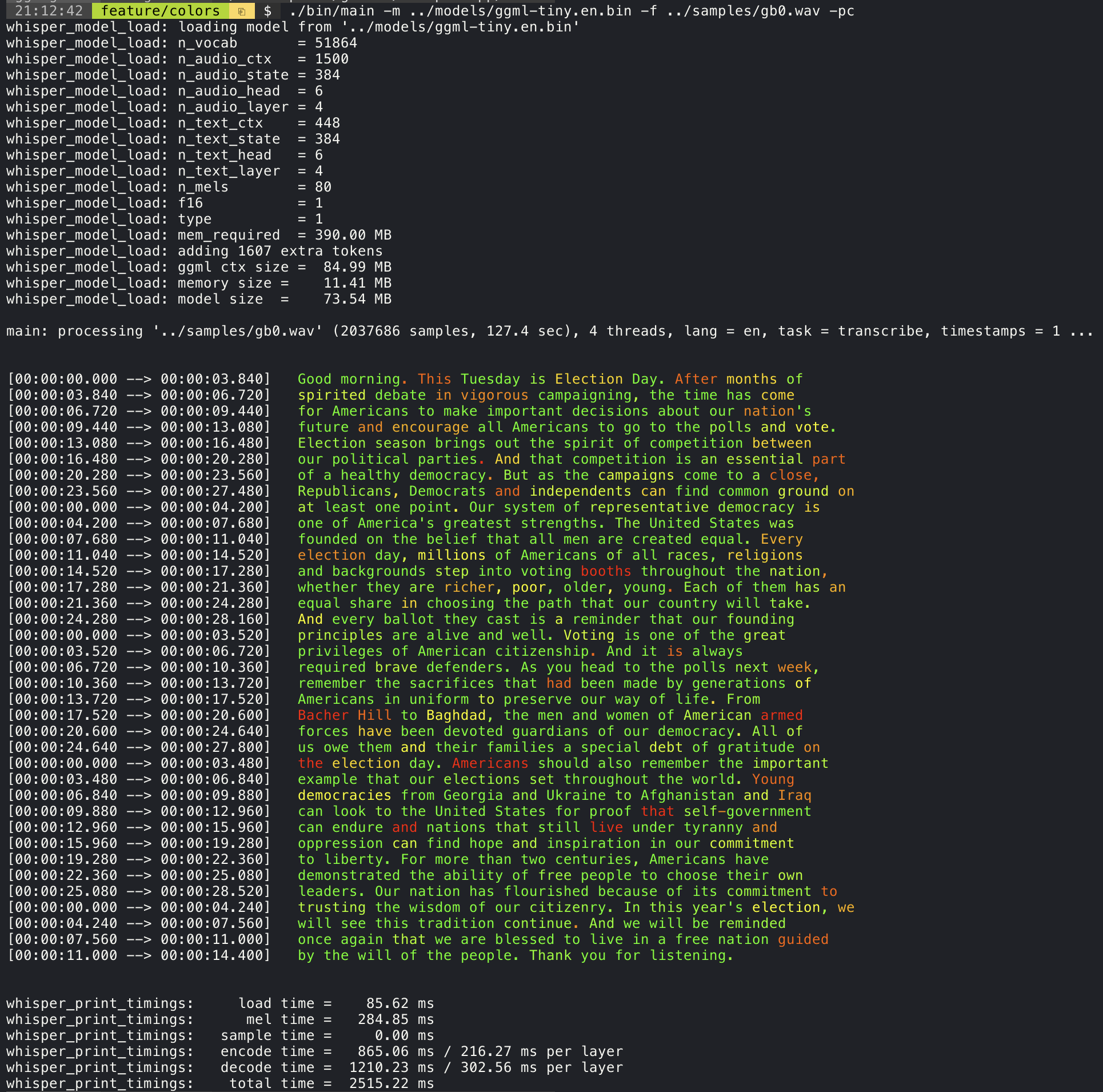

git clone https://github.com/ggerganov/whisper.cpp.git ~/repos/whisper.cpp

cd ~/repos/whisper.cpp

# download the base.en model

bash ./models/download-ggml-model.sh base.en

# make & test

make

./main -f samples/jfk.wav

# Get ready for everything else

# Install wget if needed, using `brew install wget` on macOS
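Since the rest of this manual leans on a handful of command-line tools, a quick check up front can save some head-scratching. The following is a minimal sketch; `missing_tools` is a hypothetical helper name of my own, not something that ships with whisper.cpp.

```shell
# Hypothetical helper: print each named tool that is NOT on the PATH, one per line.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
  return 0
}
```

Running `missing_tools git make wget python3 ffmpeg` prints nothing when everything used in this manual is installed, and otherwise lists what still needs installing.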

# Clone the OpenAI whisper repository

git clone https://github.com/openai/whisper ~/repos/whisper/

# Download the latest model (large-v3) from OpenAI

cd ~/Downloads

wget https://openaipublic.azureedge.net/main/whisper/models/e5b1a55b89c1367dacf97e3e19bfd829a01529dbfdeefa8caeb59b3f1b81dadb/large-v3.pt
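The model file is large, so it may be worth checking that the download is complete before converting it. As far as I can tell, the long hex string in the URL is the expected SHA-256 of the file, but treat that as an assumption. The function names below are hypothetical helpers of my own.

```shell
MODEL_URL="https://openaipublic.azureedge.net/main/whisper/models/e5b1a55b89c1367dacf97e3e19bfd829a01529dbfdeefa8caeb59b3f1b81dadb/large-v3.pt"

# Print the second-to-last path component of a URL
# (assumption: this is the expected SHA-256 of the file).
expected_sha_from_url() {
  basename "$(dirname "$1")"
}

# Print the SHA-256 of a file, using whichever tool is installed
# (sha256sum on most Linux systems, shasum on macOS).
sha256_of() {
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum "$1" | awk '{print $1}'
  else
    shasum -a 256 "$1" | awk '{print $1}'
  fi
}

# Succeed only if the file's hash matches the one embedded in the URL.
# $1 = URL, $2 = downloaded file
verify_model() {
  [ "$(sha256_of "$2")" = "$(expected_sha_from_url "$1")" ]
}
```

Usage would look like `verify_model "$MODEL_URL" ~/Downloads/large-v3.pt && echo "checksum OK"`. If it fails, re-downloading is probably the easiest fix.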

# Convert it

# Install python3 (using the website, brew is terrible at this)

# pip3 install torch (was missing for me)

cd ~/repos/whisper.cpp

mkdir models/whisper-large

python3 models/convert-pt-to-ggml.py ~/Downloads/large-v3.pt ~/repos/whisper/ ./models/whisper-large

# This should convert it

# Move it to the right place

cp ./models/whisper-large/ggml-model.bin models/ggml-large.bin

# ^^^^^ ^^^^^

# Testing it

./main -m models/ggml-large.bin -f samples/jfk.wav

Prepare your own file by converting it to a 16 kHz WAV file:

INPUT_FILE="path/to/your/input.file"

ffmpeg -i "$INPUT_FILE" -acodec pcm_s16le -ar 16000 -ac 2 "samples/test.wav"

If we want to see how certain the model is about each word, add -pc (print colors):

./main -m models/ggml-large.bin -f samples/jfk.wav -pc

# ^^^

By default, the output is in English, even for non-English audio. To avoid that, specify the spoken language with -l (here nl for Dutch):

./main -m models/ggml-large.bin -f samples/jfk.wav -l nl

# ^^^^^
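The convert-then-transcribe steps above can be chained into one small helper. This is a sketch under a few assumptions: it is run from ~/repos/whisper.cpp, the converted model sits at models/ggml-large.bin, and the function names `wav_path_for` and `transcribe_file` are hypothetical names of my own.

```shell
# Hypothetical helper: derive the WAV path under samples/ for a given input file.
wav_path_for() {
  local name
  name="$(basename "$1")"
  # Strip the last extension and put the result under samples/.
  printf 'samples/%s.wav\n' "${name%.*}"
}

# Hypothetical helper: convert to 16 kHz PCM WAV, then transcribe.
# $1 = input audio/video file, $2 = language code (defaults to en)
transcribe_file() {
  local input="$1" lang="${2:-en}" wav
  wav="$(wav_path_for "$input")"
  # -y lets ffmpeg overwrite an earlier conversion of the same file.
  ffmpeg -y -i "$input" -acodec pcm_s16le -ar 16000 -ac 2 "$wav" &&
    ./main -m models/ggml-large.bin -f "$wav" -l "$lang" -pc
}
```

For a Dutch recording this would be called as `transcribe_file ~/Downloads/interview.m4a nl`, which writes samples/interview.wav and prints the colored transcript.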

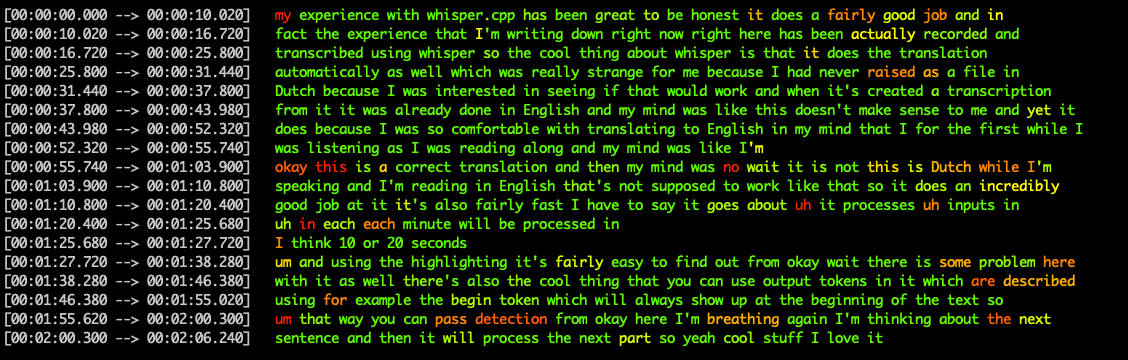

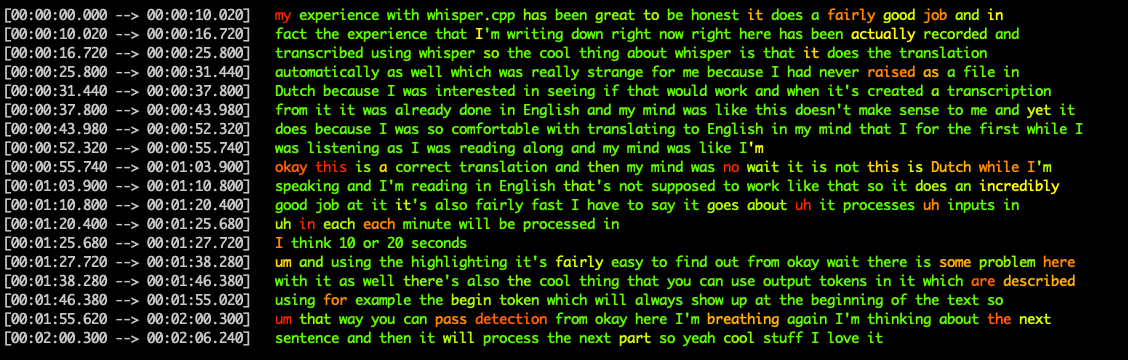

My experience

(I have edited this for readability reasons)

My experience with Whisper.cpp has been great, to be honest. It does a fairly good job, and in fact, the "experience" that I'm writing down right now, right here, has been actually recorded and transcribed using Whisper.

The cool thing about Whisper is that it also does the translation automatically, which was really strange for me because I had yet to realize that the file was narrated in Dutch. I was interested in seeing if it would work. And when it created the transcription from it, it was already done in English. And my mind was like, "This doesn't make sense to me." Yet, it does because I was so comfortable with translating to English in my mind that I was first listening as I was reading along. My mind was like, "Okay. This is a correct transcription." Then my mind was, "No, wait, it is not! This is Dutch. I was speaking, and I'm reading in English! That's not supposed to work like that!"

It does an incredibly good job and is also fairly fast: it processes each minute of audio in about 10 to 20 seconds.

The highlighting makes it fairly easy to find where there is a problem. Another cool thing is the output tokens. For example, the [_BEG_] token always shows up at the beginning of the text, so you can detect "Okay, here I'm breathing again, and I'm thinking about the next sentence," and then it will process the next part.
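If you want to use those markers to chop a transcript into "breaths", something like the following might work. It is only a sketch: it assumes you have saved the output as plain text that contains literal [_BEG_] markers, and `split_on_beg` is a hypothetical helper name of my own.

```shell
# Hypothetical helper: read text on stdin and print one line per chunk,
# splitting wherever a literal [_BEG_] marker appears.
split_on_beg() {
  awk '{
    n = split($0, parts, /\[_BEG_\]/)
    for (i = 1; i <= n; i++) {
      s = parts[i]
      gsub(/^[ \t]+|[ \t]+$/, "", s)   # trim surrounding whitespace
      if (s != "") print s             # skip empty chunks
    }
  }'
}
```

With a saved transcript (here a hypothetical transcript.txt), `split_on_beg < transcript.txt` prints each chunk on its own line.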

So yeah. Cool stuff. I love it.